Questioning the Ethos of Linux

It appears that I was struck by the Dunning–Kruger effect while writing this article. Many agree that the article below constitutes misinformation rather than a well-informed opinion on Linux.

As I am against deleting information, the article will remain on this website, but keep in mind that most of what you are about to read is probably incorrect.

Introduction

In recent years, Linux and open source software in general have gained a decent amount of popularity. They have been marketed as more privacy-focused and secure than their proprietary counterparts.

I believe that the ethos and modern attitude toward open source projects are deeply flawed. Furthermore, I find some of the culture around Linux to be noticeably suspicious.

Most of the content of this article is going to focus on how people treat Linux rather than the technological aspects of it - although I do touch on some notable security vulnerabilities.

During my research I was graciously helped by some members of the RuneScape Preservation Unit - notably Graham and Skillbert. Their exact contributions will be cited in the "Credits" section.

Preface

This document is a critique of Linux/FOSS in general rather than of a specific distribution or piece of software.

This document also assumes a basic familiarity with Linux and some of its concepts, such as:

- Linux distributions

- Package managers

- The Linux kernel

- Open source code

- Compiled code

- Distro hopping

- Command line / terminal

Despite what this document might imply, I am NOT a cybersecurity expert. Take everything described here with a grain of salt. These are just the opinions of someone who has been using computers for 13+ years and nothing more.

Background

I figure I should explain my personal history with Linux and with operating systems in general.

I use Windows 7 as my primary OS and thus use it daily. I heavily dislike later iterations of Windows, as they take control away from users in the name of security. The most glaring example of this is how Windows 10 forces you to install updates.

As for Mac OS, I was briefly exposed to it when I studied graphic design. I am not a fan of how closed this operating system is - it appears even more restrictive than Windows 10. That being said, as this was in a school environment, my exposure to it was quite limited and my opinion is therefore incomplete.

Linux Elive, a distro I experimented with a lot, features a gorgeous Frutiger Aero style interface.

Regarding Linux, I started messing around with various Linux distributions inside virtual machines around a year ago. While I am relatively new to the Linux world, I did watch plenty of videos about Linux in the years prior, most notably those of Mental Outlaw.

I am not going to claim that Windows 7 is better than Linux - especially from a privacy standpoint. I do, however, think that Linux does have some flaws that need to be discussed.

Furthermore, I would like to state that in general, privacy is not my primary concern - control is.

That being said, control and privacy are closely connected when it comes to technology. I believe I am informed enough to critique the narrative around Linux, as I have followed the "privacy scene" since 2017.

I am a citizen of Canada, but some sections of this article focus on the government of the United States of America, as it has a bigger reach and a known history of activities related to the points I will be making.

Context

For historical purposes, I will describe the context surrounding Linux as of December 2022.

There are three "brands" of operating systems: Windows, Mac OS and Linux distributions.

In the last decade, it has been shown that corporations and governments engage in mass data harvesting and surveillance. As Windows and Mac OS are proprietary, it is now widely believed that those operating systems spy on their users - this view is almost universal among regular netizens.

As a result, a significant number of people have started looking for tools and services that offer privacy.

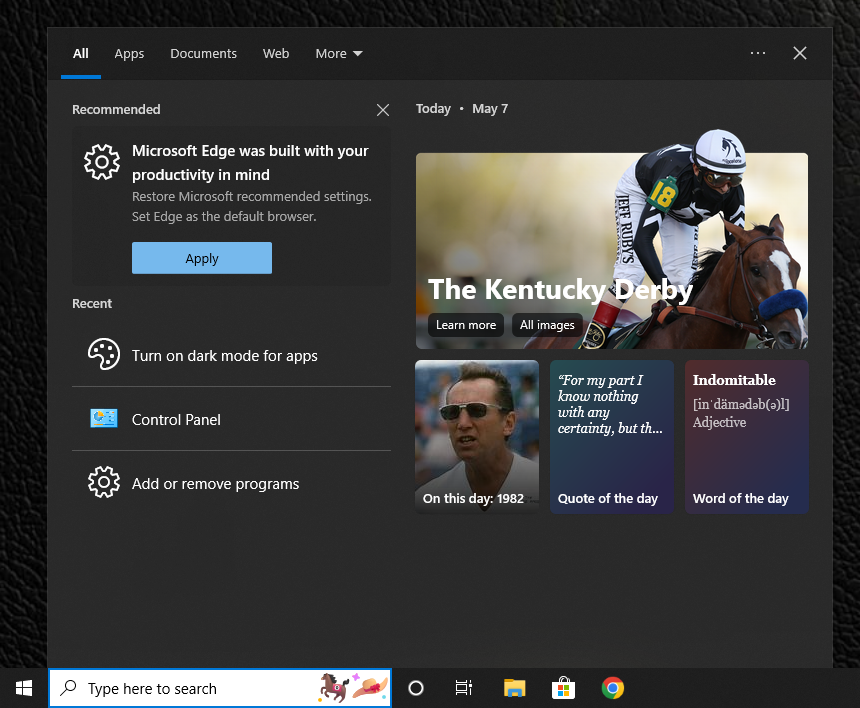

Furthermore, Windows and Mac OS both had controversial user interface changes. On Windows 10, for example, a "doodle" (vector image) was added to the search bar on the taskbar.

Some Windows 10 users do not like the fact that their search bar contains an image that changes on a daily basis.

As a result, Linux had a surge in popularity in recent years. Many believe that it is inherently more secure and private as it is open source.

Users who decide to install Linux often go "distro hopping" or stick with the distribution that most closely resembles the operating system they came from. For example, former Windows users tend to gravitate toward Linux Mint.

The act of "distro hopping" consists of installing many Linux distributions until you find one that you like. This is generally encouraged.

Many Linux distros have been trying to appeal to new users or to market themselves as privacy tools. A significant number of Linux users believe that Linux is not ready for the average person due to the lack of hardware support and the driver issues that plague most Linux distributions.

Furthermore, there is a general belief that software (and by extension the operating system) must always be up to date, as this supposedly makes the computer more secure. Users who run older versions of Windows, Mac OS or Linux are generally heavily criticized.

As of this writing, Linux distributions are regarded as the best (and only) real solution for privacy on a computer.

Open Source is Flawed

The common refrain regarding open source projects is that they are very secure as everyone can check the source code. I do, however, have a few problems with this line of thinking.

While most applications on Linux are open source, they still need to be compiled. As a result, a Linux distro is generally composed of compiled executable code.

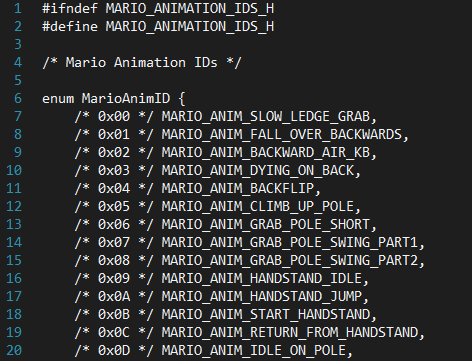

While it is possible to decompile a compiled program (a famous example being the Super Mario 64 decompilation), it is not an easy thing to accomplish.

A code snippet from the Super Mario 64 Decompilation

While it may be possible to retrieve the code using some utility, interpreting it is a whole different story. In all likelihood, comments and debug symbols will have been stripped out, making the task very difficult.

There is also the risk of distros shipping a malicious clone of a well-known app. Due to the nature of open source software, anyone could easily make spyware that deceives people by trading on the reputation of a well-known project.

Some people also theorize that the compiler used to build applications could be hijacked - meaning a well-intentioned developer could end up producing spyware simply because of the compiler used to build his program.

This means that users also have to trust that the compiler has not been compromised. This concern goes all the way back to 1984, when Ken Thompson described how a compiler could be modified to insert a backdoor into the programs it compiles (his example was the Unix login command) and to reinsert that modification whenever the compiler itself is recompiled, leaving no trace in the source code.

As a potential solution to this, some people have suggested the ideas of reproducible builds and binary transparency.
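Reproducible builds mean that building the same source twice produces bit-for-bit identical output, so independent parties can compare hashes and detect a tampered build. A common obstacle is timestamps embedded in build artifacts. The Python sketch below is a toy: a hypothetical `build()` packs source files into a tar archive and hashes it. Stamping the archive with the current time makes every build differ, while honoring the `SOURCE_DATE_EPOCH` environment variable (a convention promoted by the Reproducible Builds project) makes two builds match. It is an illustration of the idea under these assumptions, not how any real distro builds its packages.

```python
import hashlib
import io
import os
import tarfile
import time


def build_timestamp() -> int:
    """Use SOURCE_DATE_EPOCH when set, otherwise fall back to the wall clock."""
    epoch = os.environ.get("SOURCE_DATE_EPOCH")
    return int(epoch) if epoch is not None else int(time.time())


def build(sources: dict[str, bytes], mtime: int) -> bytes:
    """Hypothetical toy 'build': pack the sources into an uncompressed tar."""
    buf = io.BytesIO()
    with tarfile.open(fileobj=buf, mode="w", format=tarfile.USTAR_FORMAT) as tar:
        for name in sorted(sources):  # fixed file order on every build
            data = sources[name]
            info = tarfile.TarInfo(name)
            info.size = len(data)
            info.mtime = mtime  # the only time-dependent field stored
            info.uid = info.gid = 0
            info.uname = info.gname = ""  # don't leak the builder's user name
            tar.addfile(info, io.BytesIO(data))
    return buf.getvalue()


def digest(artifact: bytes) -> str:
    return hashlib.sha256(artifact).hexdigest()


if __name__ == "__main__":
    src = {"hello.c": b"int main(void) { return 0; }\n"}

    # Two builds made at different (simulated) times hash differently.
    print(digest(build(src, 1_000_000)) == digest(build(src, 2_000_000)))  # False

    # With a pinned SOURCE_DATE_EPOCH, both builds are bit-for-bit identical.
    os.environ["SOURCE_DATE_EPOCH"] = "1671235200"
    print(digest(build(src, build_timestamp())) == digest(build(src, build_timestamp())))  # True
```

In a real pipeline, the second builder would be a different machine or organization, and only the published hashes would be compared.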

Even if applications existed on a distro in non-compiled form, users would still need to read and understand the source code to be fully sure that the code is not malicious.

I assume that most Linux users do not read the source code of the applications they use, especially if they distro hop often - the main issue being that doing so properly takes a significant amount of time and knowledge.

As a result, I believe that the "everyone can read the source code" argument for open source is not valid.

The main counter-argument for this point is that users can compile the application themselves should they wish to. While this eliminates the need for trusting compiled applications, I still argue that there is a significant time investment and knowledge barrier.

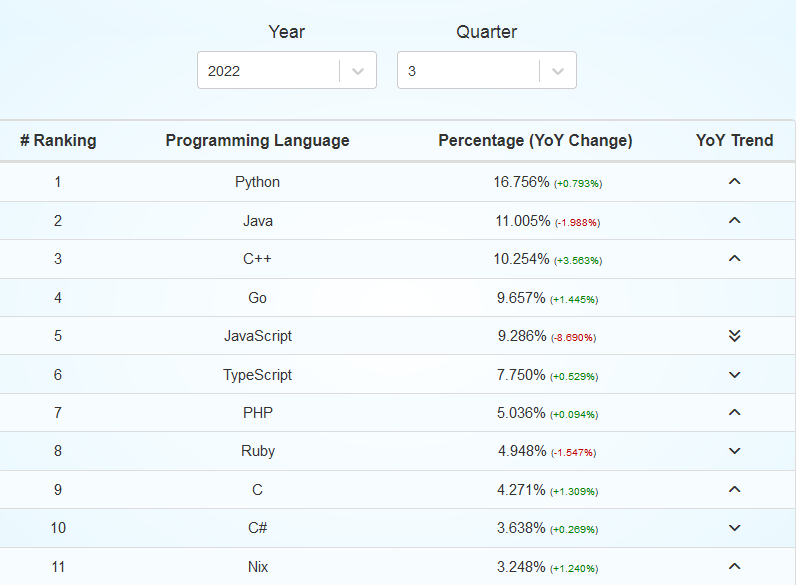

This is also not helped by the fact that there are many programming languages out there. According to this site, around 50 programming languages receive a decent amount of attention on GitHub.

If you only account for the popular ones (those above 1%), this still leaves us with 16 different programming languages.

In other words, to properly analyze the source code of most software you would encounter, you would need to learn to read, understand and compile Python, Java, C++, Go, JavaScript, TypeScript, PHP, Ruby, C, C#, Nix, Scala, Shell, Kotlin, Rust and Dart.

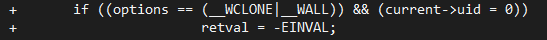

There are still problems even if you know how to read the source code. Sometimes, malicious code can be hidden in plain sight. An example of this can be found in an attempt to add a backdoor to the Linux kernel in 2003:

Those two lines of code would have created a backdoor that could grant root access by setting the user ID to 0. While the vulnerability could not have been activated remotely, it still shows how a few malicious lines of code can be a cause for concern.
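For reference, the change as widely reported at the time was a two-line addition to the kernel's `sys_wait4()` function: `if ((options == (__WCLONE|__WALL)) && (current->uid = 0)) retval = -EINVAL;`. The trick is a single `=` where a reader expects `==`. Python does not allow plain assignment inside a condition, so the sketch below models C's assignment expression with a small hypothetical helper, `assign_uid()`; the `Process` class is also an illustrative stand-in for the kernel's task structure.

```python
from dataclasses import dataclass

# Flag values mirroring Linux's <linux/wait.h>.
WALL = 0x40000000    # __WALL
WCLONE = 0x80000000  # __WCLONE
EINVAL = 22


@dataclass
class Process:
    uid: int  # 0 means root


def assign_uid(proc: Process, value: int) -> int:
    """Mimic a C assignment expression: store the value, then evaluate to it."""
    proc.uid = value
    return value


def wait4_check(current: Process, options: int) -> int:
    """Model of the 2003 change. It reads like an error check on an unusual
    flag combination, but it never actually returns the error."""
    retval = 0
    # C: if ((options == (__WCLONE|__WALL)) && (current->uid = 0))
    # The second operand is an assignment, not a comparison: it makes the
    # caller root and evaluates to 0 (false), so the error branch is skipped.
    if options == (WCLONE | WALL) and assign_uid(current, 0):
        retval = -EINVAL
    return retval


if __name__ == "__main__":
    attacker = Process(uid=1000)
    print(wait4_check(attacker, WCLONE | WALL), attacker.uid)  # 0 0
```

A caller passing that exact flag combination gets no error and silently becomes uid 0, which is why a reviewer skimming for a typo-free comparison could miss it.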

Some speculate that a well-placed "typo" could be enough to create a buffer overflow exploit, but I did not find any evidence for this possibility during my research.

Another counter-argument is that users can verify compiled code using GPG signatures.

While I do think it is a good and effective way to check the integrity of a compiled program, it requires the user to actively perform verification checks - something I doubt most Linux users do.

This also requires that the developers provide a GPG signature in the first place. If they don't, you have to compile the application yourself to verify its integrity - which can be problematic for the reasons listed earlier.
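In practice, many projects sign a single checksum file (often named `SHA256SUMS`) rather than every download; once the signature on that file checks out, each downloaded file is compared against its listed hash. The Python sketch below covers only that second, checksum half. It assumes the common `sha256sum` output format (`<hex digest>  <filename>`), and the function names are hypothetical; checking the signature itself still requires GnuPG.

```python
import hashlib
from pathlib import Path


def sha256_file(path: Path, chunk_size: int = 1 << 16) -> str:
    """Hash a file in chunks so large downloads don't have to fit in memory."""
    h = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            h.update(chunk)
    return h.hexdigest()


def parse_sums(text: str) -> dict[str, str]:
    """Parse sha256sum-style lines: '<hex digest>  <filename>'."""
    sums = {}
    for line in text.splitlines():
        if not line.strip():
            continue
        digest, name = line.split(maxsplit=1)
        sums[name.lstrip("*")] = digest.lower()  # a leading '*' marks binary mode
    return sums


def verify(path: Path, sums: dict[str, str]) -> bool:
    """True only if the file is listed and its SHA-256 digest matches."""
    expected = sums.get(path.name)
    return expected is not None and sha256_file(path) == expected
```

Note that this only proves the file matches the list; the list is only as trustworthy as the signature on it.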

As far as I know, those signatures tend to be stored online (mainly on GitHub pages), meaning that during a power outage, you would be out of luck.

Another point I want to address is the fact that Linux distributions tend to be open source. This means that the source code for a compiled program is likely available somewhere online.

For the reasons mentioned above, I find it improbable that most users look into the source code of the distro they are using - especially if they are distro hopping.

As there are 600+ active Linux distros, I believe that the majority of them have never had their code reviewed by a third party - let alone by ordinary Linux users.

That being said, I suspect that popular and mainstream Linux distributions fare better on this point, as they naturally get more scrutiny.

Software Delivery

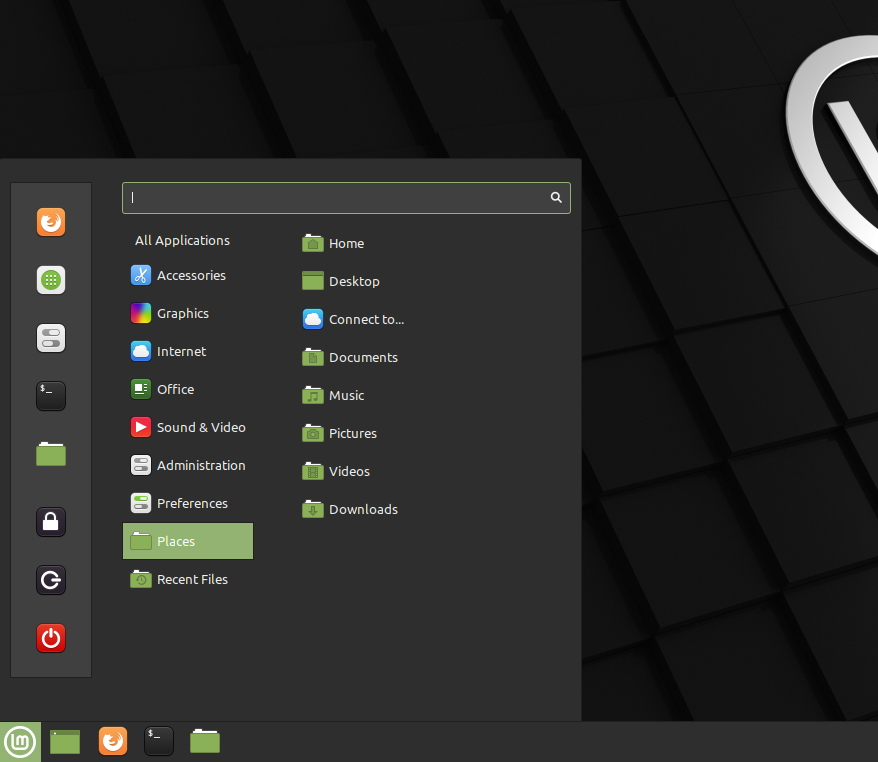

On Linux most people obtain their software either through a package manager or by typing a command into the terminal. Users also have the option to manually download software from the internet, but this option is not very popular.

I have always found it ironic how the Linux community often speaks of decentralization but loves its package managers - centralized repositories of software.

I believe that obtaining software through a package manager or the terminal is a bad security practice.

The package manager itself or a server it relies on can be compromised. There have been documented instances of security flaws in those programs in the past.

While detecting foul play can theoretically be done by analyzing network traffic with tools such as Wireshark, most users will likely simply trust the package manager.

I think that true software decentralization is the way to go. Programs should be hosted on multiple mirrors but not be downloadable from within an application or the command line.

My reasoning is that multiple independent mirrors are unlikely to all be compromised at the same time. Ideally, the end user should download a package from at least 2 distinct sources and verify their integrity using GPG signatures.
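The two-source check described above could be sketched in Python as follows, assuming both copies have already been downloaded to disk (the function name and file paths are hypothetical). It first compares the two files byte for byte with the standard library's `filecmp`, then, if a published SHA-256 digest is available, checks it as well.

```python
import filecmp
import hashlib
from pathlib import Path
from typing import Optional


def sha256_file(path: Path) -> str:
    h = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(1 << 16), b""):
            h.update(chunk)
    return h.hexdigest()


def mirrors_agree(copy_a: Path, copy_b: Path,
                  expected_sha256: Optional[str] = None) -> bool:
    """True only if both downloads are byte-identical and, when a published
    digest is supplied, also match that digest."""
    filecmp.clear_cache()  # never reuse a cached result for a re-downloaded file
    # shallow=False forces a full content comparison instead of trusting os.stat().
    if not filecmp.cmp(copy_a, copy_b, shallow=False):
        return False
    if expected_sha256 is not None:
        return sha256_file(copy_a) == expected_sha256.lower()
    return True
```

For example, `mirrors_agree(Path("mirror1/tool.tar.gz"), Path("mirror2/tool.tar.gz"))` would return `False` if either mirror served a modified archive.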

While I am aware that some package managers probably do this already, my main concern is that they could theoretically fake the validation if they were compromised - a package manager constitutes a single attack vector.

One might argue that package manager repositories are looked after by a significant number of people and are thus secure. While this is probably the case, I still believe that the use of package managers cultivates bad security habits in the end user.

After using a package manager for an extended period of time, users become conditioned to expect their software to come from a single source. This is especially dangerous for those who distro hop, as they may be exposed to less secure or compromised package managers.

Software Updates and Dependencies

Software nowadays has a lot of dependencies. Due to the values described in the "Context" section, people are incentivized to update their software and dependencies as soon as an update is out. This is usually done using the command line or a package manager.

A lot of software relies on ImageMagick to run (comic source).

Some software scans for dependency updates and offers to install them through the terminal.

It is my belief that downloading software updates automatically is a bad idea. A significant number of open source projects are run by only one person. While that person may be trusted, this can change any day.

There are known instances of trusted open source projects being sabotaged by their own creators.

An example that comes to mind is how node-ipc pushed an update that overwrote the contents of files on machines with a Russian or Belarusian IP address, as a protest against Russia's war in Ukraine.

According to this GitHub page, at least 105 applications used node-ipc at the time the attack was discovered. This means that all those applications had the potential to be infected with malware if they updated the dependency.
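One reason a single sabotaged release can spread so widely is that dependencies are often declared as version ranges rather than exact versions; npm, for instance, records a caret (`^`) range by default when you install a package. The simplified Python sketch below models caret-range matching for versions 1.0.0 and above (it ignores pre-release tags and npm's special rules for 0.x versions). It shows that any later release in the same major series satisfies the range, while an exact pin does not. The version numbers are hypothetical.

```python
def parse(version: str) -> tuple[int, int, int]:
    major, minor, patch = (int(part) for part in version.split("."))
    return major, minor, patch


def satisfies(version: str, spec: str) -> bool:
    """Simplified npm-style matching for '^X.Y.Z' or an exact 'X.Y.Z'.
    Assumes X >= 1 and no pre-release suffixes."""
    v = parse(version)
    if spec.startswith("^"):
        low = parse(spec[1:])
        # Caret range: anything >= X.Y.Z but below the next major version.
        return low <= v < (low[0] + 1, 0, 0)
    return v == parse(spec)


if __name__ == "__main__":
    new_release = "10.2.5"  # hypothetical release pushed by the maintainer
    print(satisfies(new_release, "^10.1.0"))  # True: eligible whenever the range is re-resolved
    print(satisfies(new_release, "10.1.0"))   # False: an exact pin ignores it
```

Lockfiles mitigate this between resolutions, but any fresh install or lockfile refresh will pull in whatever the newest matching release is.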

Another similar example is the colors and faker incident, in which the developer sabotaged two of his own npm libraries to propagate a political message. According to the linked article, colors.js had been downloaded 3.3 billion times since its creation and was used in more than 19,000 projects.

In other words, 19,000 projects had the potential to be sabotaged on the whim of a random person on GitHub.

I believe that software should be shipped with known stable versions of its dependencies for the reasons mentioned above.
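Shipping known versions can be taken one step further by pinning each dependency to the hash of the exact archive that was reviewed, similar in spirit to pip's `--require-hashes` mode or the `integrity` field in npm lockfiles. The Python sketch below uses a hypothetical in-memory lock table, `LOCK`, and rejects any archive whose name, version or SHA-256 digest differs from what was recorded. The package name `webp2png` and its contents are made up for illustration.

```python
import hashlib

# Hypothetical lock table: dependency -> (pinned version, SHA-256 of the reviewed archive).
# In a real lock file the digest would be a literal hex string; it is computed
# here only so the example is self-contained.
LOCK = {
    "webp2png": ("1.4.2", hashlib.sha256(b"reviewed archive bytes").hexdigest()),
}


class LockMismatch(Exception):
    pass


def check_against_lock(name: str, version: str, archive: bytes) -> None:
    """Raise LockMismatch unless the archive is exactly what was pinned."""
    if name not in LOCK:
        raise LockMismatch(f"{name} is not in the lock file")
    pinned_version, pinned_digest = LOCK[name]
    if version != pinned_version:
        raise LockMismatch(f"{name}: expected {pinned_version}, got {version}")
    if hashlib.sha256(archive).hexdigest() != pinned_digest:
        raise LockMismatch(f"{name} {version}: archive digest does not match the lock")
```

With a hash pin, even a compromised mirror serving a modified archive under the same version number would be refused.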

The main counter-argument for this is that software needs to be updated to patch potential zero-day vulnerabilities.

I do agree that some software should be updated - however, it is important to keep in mind that not all software is equal when it comes to security risk.

For example, a utility that would convert .webp files to .png would likely not connect to the internet at all, meaning it is very unlikely that it would be used as an attack vector. Unless really required, I think a dependency like this should be left alone.

As for more critical software, I believe that manually downloading updates is the best course of action.

When users look for an update through a web browser, they are more likely to detect foul play than if they download it straight from the command line. My reasoning is that they may encounter news articles or user complaints before proceeding to update their application or dependencies.

I believe a better practice would be to simply let the user know that a new update is out without letting them download it directly.
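A notify-only update check could look like the Python sketch below. It assumes the latest version number has already been obtained somehow (for example, from a project's release page) and only compares it with the installed version; it never downloads or installs anything. The function names and the message wording are hypothetical, and versions are assumed to be purely numeric and dotted.

```python
from typing import Optional


def parse_version(v: str) -> tuple[int, ...]:
    # Numeric comparison, so "1.10.0" correctly counts as newer than "1.4.2".
    return tuple(int(part) for part in v.split("."))


def update_notice(installed: str, latest: str) -> Optional[str]:
    """Return a message for the user if a newer version exists; do nothing else."""
    if parse_version(latest) > parse_version(installed):
        return (f"Version {latest} is available (you have {installed}). "
                "Review the release notes and download it manually if you choose to.")
    return None
```

The design choice is that the function's only side effect is returning text; the decision and the download stay with the user.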

An argument can be made that forcing manual updates would lead to laziness and thus negligence of security.

I agree that this is the most likely outcome. Ideally, there should be a way to incentivize users to update.

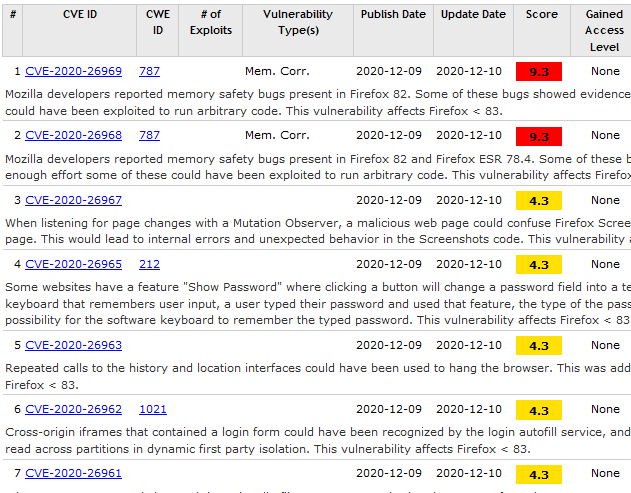

For common attack vectors such as web browsers or web-hosting tools, the best course of action would likely be to require the user to check a third party CVE database before actually using a high-risk application.

This is a somewhat difficult problem to solve, because users would likely start ignoring the warning over time.

Ideally, something similar to two-factor authentication would be created - meaning active input from the user would be required (the code being hosted on the database).

There should also be a way for the user to bypass it in an offline environment, which further complicates the problem.

Regardless, I do not know how practical and realistic such a solution would be, so I will not linger on this point any further.

Government Involvement

As shown in the "Context" section, governments have a vested interest in collecting data. Assuming that Linux is truly private, this raises the question: why is Linux allowed to exist? The main reason is probably that governments also use Linux.

In other words, the existence of Linux is beneficial to them. Due to the nature of open source, they can take an existing distro and modify it to fit their needs. This is undoubtedly more efficient than spending a significant amount of manpower to build their own Unix-based system from scratch.

I therefore theorize that making Linux illegal would be too inconvenient for them. That being said, Linux is probably still "problematic" in the eyes of the feds.

If they cannot outright eradicate it, the next best course of action would be to take control of it or at least influence it.

While one might argue that the nature of open source makes Linux impervious to governmental influence - or at least makes it harder, I beg to differ.

The code and the systems might be secure, but developers can be influenced or even bribed. There are known cases of this.

The NSA is known to have interfered with an encryption standard. They ensured that the Data Encryption Standard had a 56-bit key length instead of a 64-bit one.

Many people think that this was because they had the hardware to crack a 56-bit key at the time - this was around 1975.
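The practical effect of the shorter key is simple arithmetic: each bit removed halves the number of possible keys, so going from 64 to 56 bits shrinks the keyspace, and therefore the worst-case brute-force effort, by a factor of 2^8 = 256. On average, a brute-force search finds the key after trying half the keyspace, i.e. 2^55 keys for DES.

```latex
\frac{2^{64}}{2^{56}} = 2^{8} = 256, \qquad
2^{56} = 72\,057\,594\,037\,927\,936 \approx 7.2 \times 10^{16}, \qquad
2^{64} \approx 1.8 \times 10^{19}
```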

Another, more recent example is the NSA's interference with the Dual_EC_DRBG random number generator, standardized around 2006 and revealed in 2013 through documents leaked by Edward Snowden.

It is not hard to imagine that there might have been more interference since then.

In the past, it has been shown that governments take a special interest in those who seek privacy.

A notable example of this was the ANOM phone. This was a smartphone running a custom version of Android, marketed as a privacy phone. It was created by the FBI to catch criminals - messages sent from the phone were secretly copied to a server accessible to law enforcement.

I think it is probable that some distros out there are designed as honeypots (government traps).

Furthermore, I believe that the US government has influenced many standards of the Linux community - their end goal being to alienate the average computer user from Linux distributions and to stifle progress - something that will be discussed in the next section.

Control Through Freedom

Linux in itself is not monolithic, but it can be argued that the community treats it as such. From what I can see, specific distros are rarely recommended unless someone specifically asks for suggestions.

There is a prevalent view that all Linux distributions are equal and that no distro is better than another because it all boils down to personal preference.

I personally find this view to be dangerous.

As mentioned earlier, there are 600+ active distros. While one might argue that they are equal in spirit, I doubt they are all equally secure.

It should also be noted that Linux has no major competitor in the open source space. While I am aware that alternatives such as ReactOS, Qubes OS and MenuetOS exist, they are mere shadows compared to the popularity of Linux.

I find this to be suspicious. One might argue that the government might have stifled potential Linux alternatives as a form of controlled opposition.

It is well known that people will only use an operating system that has a strong software library. An example of this can be seen in the failure of the Windows Phone which had a severe lack of third-party applications.

As a result, any potential alternatives to Linux are not viable for common users and most tech enthusiasts, as they lack software. There is also a bigger security risk, since there are fewer eyes on those projects than on Linux.

Some will say that this is probably just the natural result of the difficulty of making an operating system and of the modularity of Linux.

I agree that this is probably the case - I have never made an operating system myself, but I imagine it is not the easiest thing in the world to do.

That being said, the result is still the same regardless of the cause. The feds have all the reasons in the world to interfere with Linux as it is the only viable option that can be a cause for concern.

Another aspect of Linux I find suspicious is the large number of distributions out there. It heavily reminds me of the "divide and conquer" tactic. I believe that the large number of distros is a way to divide the resources of the open source community.

If there were only 3-4 competing Linux distributions, chances are they would be better as more resources would have gone into them. I think that Linux would have been in a better position today in regard to progress and security.

If my theory is correct, this is a very effective tactic. People would have been tricked into thinking that creating their own distro is an expression of freedom while all they would really do is weaken the open source community.

There would also be the benefit of fueling tribalism.

Whether this is by design or not is irrelevant. My point is that people (especially developers) are split across 600+ distros, across 50 programming languages and across around 54 million active projects.

It is undeniable that the Linux/open source community is heavily divided.

The only saving grace of this situation is that it is highly unlikely that the majority of projects are compromised, as doing so would require an immense amount of manpower.

That being said, this effectively means your only option is to trust people - which is not ideal, to say the least.

Target Demographic

In recent years, many Linux distros have marketed themselves as friendly to new users. This is especially true of the distributions that claim to be privacy-focused.

I find some of the modern marketing around Linux to be suspicious, especially its use of minimalism and of the Alegria art style.

I believe that this might be an attempt to discredit Linux in the eyes of the average computer user.

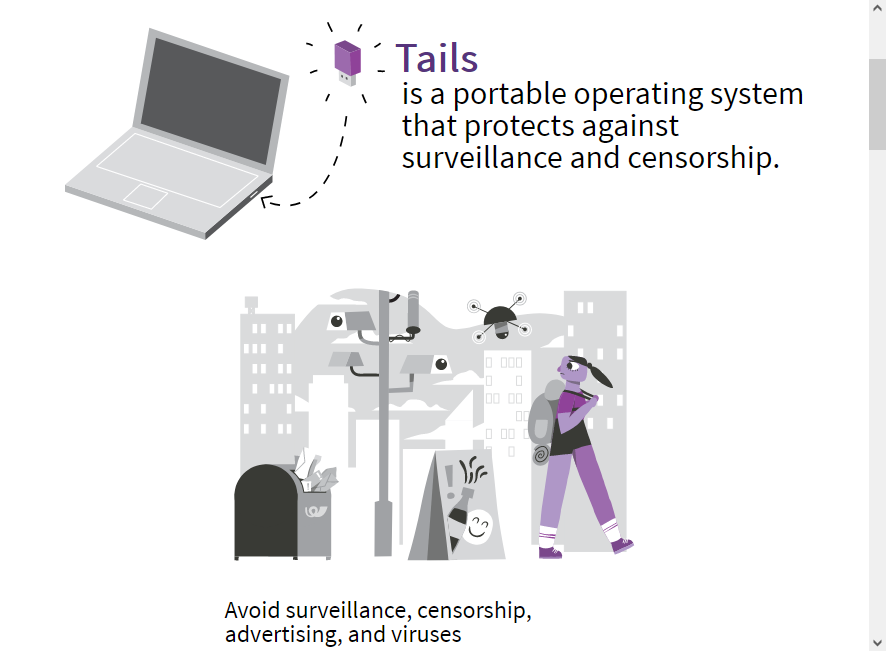

An example of this can be found on the Tails website. The art style used there is called Alegria - also known as Corporate Memphis. As the name implies, it is heavily used in the marketing of big corporations and is almost universally hated.

So this raises the question: why would a Linux distro use imagery heavily associated with big tech companies?

The most probable explanation is that they try to appeal to a wide audience and try to be as inclusive as possible.

Another similar example is how Linux Mint has adopted a minimalist interface resembling the one found on Windows 10.

Granted, it is generally very easy to change the UI on Linux. But as we can see, distros still try to appeal to the common computer user.

As of this writing, Linux is absolutely not ready to be in the hands of technologically illiterate people. The main problem is the lack of hardware and driver support.

Linux is still a far cry from Windows and Mac OS as it is far from having an "it just works" default state.

I believe that the current popularity of Linux might have been artificially created by the government - at least the way Linux has been branded as a privacy tool usable by the common user.

If an inexperienced user tries Linux, they are likely to run into some issue and go back to Windows or Mac OS. As a result, they might be alienated from it. As they say, first impressions matter most.

The main counter-argument for this is that this is just the result of the Linux distributions trying to widen their audience.

While this is likely the case, I still believe that they are shooting themselves in the foot. An inexperienced computer user will likely not understand why Linux does not work on their machine; all they will remember is that Linux is a buggy and unstable mess.

I am by no means against the democratization of Linux, but I still think it is not ready yet.

The only real solution to this problem in the current context would be to ship computers with Linux on them - meaning the distro would have been confirmed to work on a specific hardware configuration beforehand.

The problem is that most computer manufacturers already have deals with Microsoft. While some computers do come pre-installed with Linux, they are still a rarity.

Linux as a Lifestyle

To anyone familiar with the Linux community, it should come as no surprise that some people have adopted the usage of Linux as a part of their identity.

As a result, I believe that a significant number of Linux users have developed some kind of unhealthy blind faith when it comes to Linux and open source projects.

While measuring faith is a difficult task, many argue that Linux has become some kind of religion - including Linus Torvalds himself.

Regardless, it is clear that many people trust Linux - that much is obvious by the sheer amount of attention it gets.

Many will argue that what I am describing is not blind faith but rather simple trust in open source projects maintainers.

As per my arguments above, security on Linux is ultimately in an uncertain state.

It is my belief that the best course of action is to simply assume that everything is compromised and thus assume the worst-case scenario.

My reasoning is that trusting a piece of software might turn out to be a bad idea. On the other hand, if you distrust a program that turns out to be secure, you haven't lost anything. As they say, better safe than sorry.

Synopsis

The following is a list of the arguments I just made. If you haven't already, I strongly encourage you to read the full text to understand my reasoning.

- You generally can't easily decompile compiled applications on Linux.

- Distros may have malicious clones of well-known applications.

- Compilers can theoretically be hijacked.

- The "everyone can read the source code" argument is not valid.

- Even if you can read the source code, vulnerabilities can be difficult to spot.

- The kernel could theoretically be compromised.

- Distro hopping introduces a major security risk.

- Software, updates and dependencies should NOT be downloaded automatically.

- Software should have its integrity verified from multiple mirrors.

- Downloading software using the command line and package managers is a bad practice.

- Software can be sabotaged by its own creators.

- Not every piece of software should be updated.

- The US government has a vested interest in controlling the progress of the Linux community.

- The NSA has interfered with some standards in the past.

- The FBI is interested in individuals that seek privacy.

- Some Linux distros are probably honeypots.

- Not all distros are equally secure.

- Linux has no major and viable open-source competitor.

- The large amount of distros might be a tactic used to divide the resources of the Linux community.

- The Linux community is heavily divided.

- Many modern distros target new users, perhaps by the feds' design.

- Linux is not ready for new users.

- New users will likely be alienated from Linux.

- Linux has become a lifestyle.

- Most Linux users probably trust Linux and open source projects way too much.

- It is best to assume that everything has been compromised.

Closing Thoughts

As I argued, Linux is not that secure when all things are considered. Thankfully, there are some ways to mitigate security risks and privacy concerns.

Recommendations for the End User

If you seek privacy, then spyware should be your primary concern. I would recommend having a separate Linux machine to access the internet, the reason being that if your main machine is compromised, it will not be able to send collected data without an internet connection.

In addition, I would also avoid machines with Bluetooth and infrared connectivity. You should ideally ensure that the machine cannot physically make those connections.

While I argued that spyware might evade scrutiny, destructive malware is more likely to be spotted as it is more visible by nature. As such, I would recommend that you stick with popular open source projects and distros.

When downloading software, you should do so manually. If you can, download it from at least 2 different sources and check whether both packages match at the binary level. You should also avoid package managers and downloading programs straight from the command line.

Furthermore, I would advise you to only use a live session on your main machine. As the operating system only temporarily exists in RAM, it is impossible for you to leave unwanted data on your hard disk.

You should also encrypt your data. As of this writing, the Advanced Encryption Standard (AES) is considered the standard when it comes to encryption.

That being said, documents leaked in 2014 showed that the US government was investigating how to break it. As it has been nearly a decade since this leak, they may have acquired the technology to crack it since then.

I am not fully sure what the ultimate method for data encryption would be. My bet would be some kind of personal data randomization layer on top of the already encrypted data, but I am no cryptography expert, so I cannot give any advice here.

You should also keep encrypted backups of your files on multiple storage media to avoid losing anything, as flash storage tends not to have a long lifespan.

Recommendations for Developers

For the reasons explained earlier, allowing software to automatically update itself or its dependencies is a bad idea. In an ideal world, the end user should only be informed of an update.

I would also recommend shipping a program with known stable versions of its dependencies so as to prevent supply chain attacks.

Furthermore, I believe that a local copy of the GPG keys should be downloaded to the user's machine to allow them to perform integrity checks in an offline environment.
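With a local keyring, a detached signature can be checked without any network access. The Python sketch below shells out to `gpgv`, GnuPG's signature-verification-only tool, passing an explicit keyring file. It assumes GnuPG is installed and that the signer's public key was exported into that keyring file beforehand (ideally after checking its fingerprint through more than one channel); the function names and file names are hypothetical.

```python
import os
import shutil
import subprocess


def gpgv_command(keyring: str, signature: str, data: str) -> list[str]:
    """Build the gpgv invocation. An absolute keyring path stops gpgv from
    treating a bare file name as a file inside the GnuPG home directory."""
    return ["gpgv", "--keyring", os.path.abspath(keyring), signature, data]


def verify_offline(keyring: str, signature: str, data: str) -> bool:
    """True if gpgv accepts the detached signature using only the local keyring."""
    if shutil.which("gpgv") is None:
        raise FileNotFoundError("gpgv is not installed")
    result = subprocess.run(gpgv_command(keyring, signature, data),
                            capture_output=True, text=True)
    return result.returncode == 0  # gpgv exits with 0 only for a good signature
```

Usage would be along the lines of `verify_offline("trusted-keys.gpg", "tool-1.4.2.tar.gz.sig", "tool-1.4.2.tar.gz")`, with no internet connection required.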

Credits

The individuals listed here only gave me information regarding Linux. They probably do not share the same opinions as me as they did not see this document before its publication.

Graham informed me about compiled applications, reproducible builds, binary transparency, the Ken Thompson hack, compiled applications on Linux, the colors-faker incident, NSA interference with DES and the 2003 Linux kernel backdoor attempt.

Skillbert informed me about well-hidden exploits in source code, the possibility of a typo resulting in a buffer overflow and Dual_EC_DRBG.

Written by manpaint on 17 December 2022.